
Mechanical Shadows: The Impact of 80s and 90s Robots on Today's AI Culture

From R2-D2’s resourceful chirps to Data’s quiet quest for personhood, robots of the 1980s and 1990s shaped how we imagine and interact with artificial intelligence today. This essay traces the archetypes, design cues, ethical debates and research questions those mechanical characters cast forward - and how their mechanical shadows still inform modern robotics, AI policy and popular expectations.

A short prologue: why old robots still matter

When audiences in the 1980s and 1990s watched metal limbs move and synthetic voices speak, they weren’t only being entertained - they were being taught. Characters such as R2‑D2, Data and the cinema’s darker machines built a vocabulary for thinking about artificial minds: what they can feel, who they can be, and how we should treat them. Those portrayals seeped into engineering aims, design choices, public fears and policy debates that define our AI culture today.

Below I trace the major archetypes that dominated 80s/90s robot fiction, show how each cast a long psychological and technological shadow, and point to concrete ways those shadows shape current robotics, human–computer interaction and ethical discourse.

What the 80s and 90s gave us: five dominant robot archetypes

Companion/helper (the lovable assistant)

- Example - R2‑D2 (Star Wars) - resourceful, compact, expressive despite being non‑verbal. See the R2‑D2 page for context: https://en.wikipedia.org/wiki/R2-D2

- Impact - established that robots could be friendly, emotionally engaging and trusted partners rather than just tools.

The earnest android seeking personhood

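- Example - Lieutenant Commander Data (Star Trek: The Next Generation) - curious, moral, determined to understand humanity. See Data and the landmark episode “The Measure of a Man”: https://en.wikipedia.org/wiki/Data_(Star_Trek) and https://en.wikipedia.org/wiki/The_Measure_of_a_Man_(Star_Trek:_TNG)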

- Impact - reframed robots as potential moral agents, fueling debates about rights, agency and legal personhood.

The uncanny human-replacement (and the horror variant)

- Example - The bioengineered replicants of Blade Runner (1982) and the T‑800 cyborg in The Terminator (1984) - artificial beings rendered dangerously humanlike, even indistinguishable from humans. See The Terminator: https://en.wikipedia.org/wiki/The_Terminator

- Impact - fed our fear of machines that imitate humanity too well, an intuition roboticist Masahiro Mori had already named the “uncanny valley” in 1970 (https://en.wikipedia.org/wiki/Uncanny_valley).

The law‑and‑order cybernetic enforcer

- Example - RoboCop (1987) - a human body fused with corporate technology and law‑enforcement authority: https://en.wikipedia.org/wiki/RoboCop

- Impact - prompted early, visceral debates about militarized or police robots and corporate control of intelligent systems.

The tender giant / moral mirror

- Example - The Iron Giant (1999) - a large, non‑threatening machine whose story examines violence, choice and empathy: https://en.wikipedia.org/wiki/The_Iron_Giant

- Impact - showed the public a powerful counter-narrative to killer‑robot tropes: machines can choose empathy over aggression.

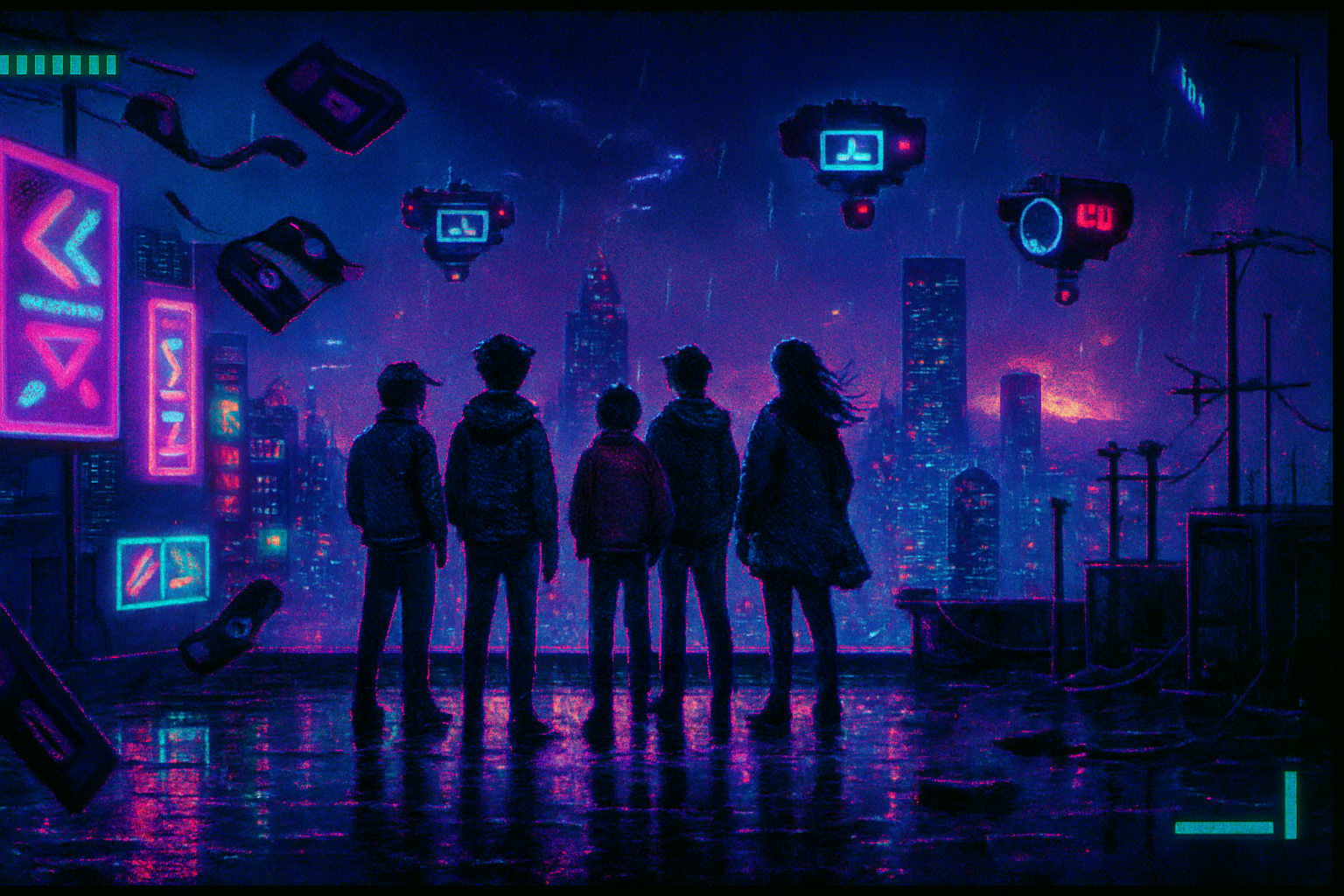

Beyond these five archetypes, anime and cyberpunk - notably Ghost in the Shell (1995) - asked harder questions about identity, embodiment and consciousness in an increasingly networked world: https://en.wikipedia.org/wiki/Ghost_in_the_Shell

How fiction shaped design language and user expectations

The emotional cues and physical designs of 80s/90s robots influenced real‑world engineers and designers in concrete ways:

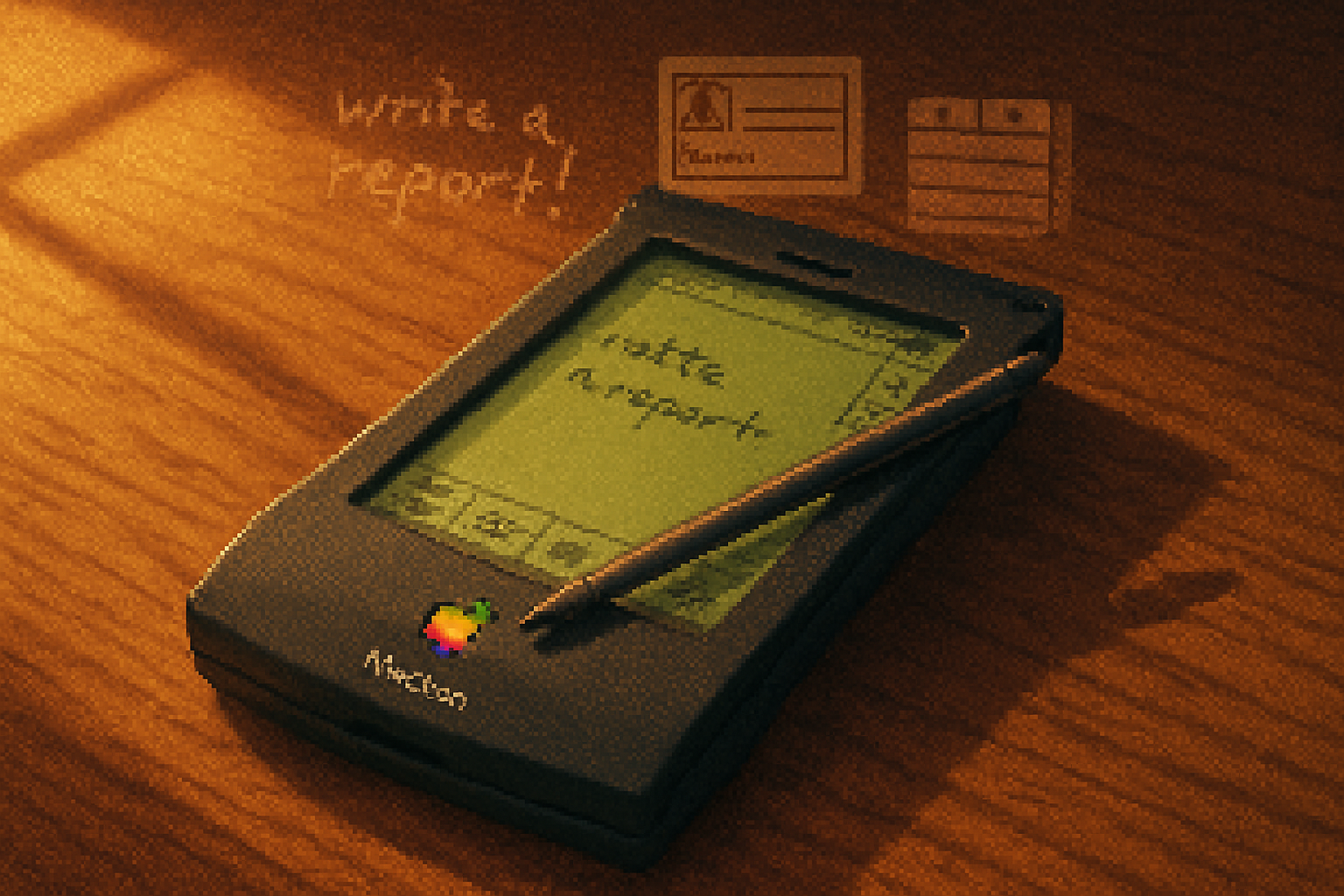

Anthropomorphism by design - small, domed, or rounded shapes (think R2‑D2) and expressive faces made robots easier to accept. Designers of social robots later adopted these patterns to invite trust and reduce anxiety. Social robots such as SoftBank’s Pepper and consumer-oriented companions often rely on those same friendly cues.

Nonverbal expressivity - R2‑D2’s chirps or Data’s pauses taught audiences to read mechanical gestures and silences as meaningful. This guided human–robot interaction (HRI) research toward multimodal communication (tone, posture, lights) rather than text alone.

Scale and movement - The Iron Giant and similar characters influenced thinking about how scale communicates intent - slow, deliberate motion for benign giants; jerky, precise motion for unsettling machines.

These cinematic vocabularies are visible in modern platforms. Robots like Boston Dynamics’ Spot appear intentionally animal‑like, while social robots adopt rounded shapes and expressive LEDs to communicate internal states in a human‑readable way.

From story beats to research questions: academic and engineering echoes

Fiction didn’t just give aesthetics - it primed entire lines of inquiry:

Personhood and rights - Data’s quest for legal recognition (“The Measure of a Man”) is often cited in ethics discussions about robot rights and the legal status of advanced AI. Research into robot autonomy, responsibility and legal frameworks frequently references these narrative thought‑experiments.

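Emotional attachment and care - Stories where humans bond with machines (e.g., R2‑D2, The Iron Giant) anticipated research into why people empathize with robots and treat them as social partners, work popularized by researchers such as Kate Darling.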

Trust, predictability and safety - Killer‑robot narratives helped focus engineers on fail‑safe design, transparency and explainability, practical concerns that matured into modern research agendas in AI safety and interpretable machine learning.

The uncanny valley and realism - The discomfort around near‑human robots, observed and theorized in the 1970s and reinforced by 80s/90s media, pushed researchers to study how appearance and motion together influence social acceptance (see https://en.wikipedia.org/wiki/Uncanny_valley).

Ethical debates: how fiction primed public imagination and policy

Popular narratives from the era did more than define expectations; they set the emotional register for debates that policymakers and the public would have later:

Militarization - The image of unstoppable killer machines created public unease around autonomous weaponry long before armed drones and lethal autonomous weapons became practical concerns. This cultural memory still shapes how civilians view military AI projects.

Corporate control and surveillance - RoboCop’s fusion of policing and corporate interests feeds skepticism about privatized AI and surveillance infrastructure, skepticism that reappears in critiques of data collection and predictive policing.

Rights and responsibilities - Data’s courtroom drama provided a digestible frame for discussing whether a sophisticated machine could be owed rights or bear duties - a question now emerging in legal circles dealing with autonomous systems.

In short, fiction distilled complex ethical dilemmas into memorable parables that still influence public sentiment toward AI policy.

Concrete threads from fiction to today’s AI products

Companion archetype → consumer social robots and home assistants. The friendly helper image (R2‑D2, Short Circuit’s Johnny 5) arguably smoothed public acceptance for digital assistants (Siri, Alexa) and early social robots (Jibo, Kuri).

Data’s longing → current talk of general intelligence and “alignment”. Data made abstract questions about consciousness and moral agency emotionally accessible; today, similar questions power debates over whether advanced AI should have rights or whether we should pursue artificial general intelligence at all.

Killer/weapon tropes → research into AI safety and governance. Terminator‑style scenarios amplified support for precautionary approaches in AI safety, transparency and regulation.

Visual/behavioral design cues → HRI best practices. The work of making robot intent legible to humans (using movement, color, sound) mirrors techniques first popularized by film and TV.

Nostalgia as cultural leverage (and a marketing tactic)

Nostalgia for 80s/90s robots is a powerful marketing and cultural lever. Startups and entertainment franchises lean on retro iconography to signal trustworthiness or to evoke a simpler, more optimistic era of technology. But nostalgia can also obscure risks: a fond cultural memory of benevolent machines may downplay legitimate concerns about surveillance, bias and misuse.

Two caveats: complexity and responsibility

Fiction is a simplifier. Movies and TV compress ethical nuance for drama. Engineers and policymakers must translate those narratives into properly detailed risk assessments, not just moral parables.

Media influence is bidirectional. While fiction shapes public expectations, real advances in robotics and AI also feed back into storytelling. The relationship is iterative - each influences the other.

Where culture meets code: what we should keep in mind

Designers should borrow the empathy of filmic robots (expressivity, legibility) while resisting the temptation to weaponize anthropomorphism to obscure a system’s limits or to collect more data.

Educators and communicators can use familiar archetypes as entry points for public conversations about ethics and governance - but they should couple those narratives with clear technical explanations.

Policymakers should recognize the emotional framing that fiction creates and commission empirical work to align popular concerns with actual risk profiles.

Reading list / sources and further context

- R2‑D2 (Star Wars): https://en.wikipedia.org/wiki/R2-D2

- Data and “The Measure of a Man”: https://en.wikipedia.org/wiki/Data_(Star_Trek) and https://en.wikipedia.org/wiki/The_Measure_of_a_Man_(Star_Trek:_TNG)

- The Terminator: https://en.wikipedia.org/wiki/The_Terminator

- RoboCop: https://en.wikipedia.org/wiki/RoboCop

- The Iron Giant: https://en.wikipedia.org/wiki/The_Iron_Giant

- Ghost in the Shell: https://en.wikipedia.org/wiki/Ghost_in_the_Shell

- Uncanny valley (foundational idea for near‑human discomfort): https://en.wikipedia.org/wiki/Uncanny_valley

- Kate Darling - TED Talk on why we respond to robots like people (research on empathy for robots): https://www.ted.com/talks/kate_darling_why_we_respond_to_robots_like_people

For deeper reading, look into human–robot interaction (HRI) literature and popular analyses in outlets such as IEEE Spectrum, The Atlantic and Wired that explore the interplay between science fiction and technological development.

Final note: mechanical shadows are also invitations

The machines of the 80s and 90s left us a mixed inheritance: hope, fear, curiosity, and a surprisingly detailed imagination of what intelligent machines could be. Those cultural legacies continue to guide engineers, ethicists and the public - sometimes helpfully, sometimes misleadingly. Recognizing these mechanical shadows gives us the chance to choose which echoes we amplify and which we revise as real AI systems grow more capable.